TL;DR

- AI agents operate real dev tools to plan, run, verify, and fix tasks, accelerating repetitive work.

- They excel at scaffolding, CRUD, refactors, and cross-file edits; humans retain architecture and security oversight.

- Speed gains are real: base apps in minutes/hours versus days/weeks with traditional toolchains.

- The winning model is hybrid: humans set goals and review; agents execute with tests and guardrails.

- Choose by goal, control, skill, stack fit, and error handling; tools include AppWizzy, Lovable, Bolt.new, v0.dev, and GitHub Copilot Workspace.

Fact Box

- Agents can compress the first 50–70% of the development lifecycle (scaffolding and boilerplate); humans focus on architecture and domain-specific logic.

- Initial development: traditional toolchains take days or weeks to scaffold; agents produce a functional base app in minutes or hours.

- An AI dev agent plans, executes, verifies, and iterates using real tools (Git, npm, Docker, tests), not just text.

- Agents run autonomous loops: run tests, read errors or logs, attempt fixes, and re-run until passing.

- Best-fit tasks: CRUD apps, internal tools, SaaS scaffolding, refactoring, and repetitive cross-file changes.

If you’ve ever wondered whether AI agents can actually build real web apps, or whether the industry is just showing you polished demo theater, this article cuts through the noise and gives you the most honest answer you’ll read this year.

Before we get into the details, consider the questions that brought you here:

- Can AI agents truly take over parts of the development workflow, or do they still crumble without human guidance?

- How do modern agentic platforms differ from the traditional IDEs, frameworks, and toolchains developers already rely on?

- What’s the safest, smartest, and most profitable way to introduce agents into a real engineering process, without breaking your system or your team?

As Alan Kay said, “The best way to predict the future is to invent it.” And in 2025, that future is being reinvented through agentic development, though not in the way hype videos suggest.

The transition toward AI-driven engineering is no longer theoretical. Leading labs and cloud vendors have published real studies and rolled out early production systems demonstrating both the promise and dangers of agentic coding: significant speed gains on repetitive tasks, paired with non-trivial risks like security oversights, hallucinated commands, and incorrect multi-step changes in critical code paths. Evaluations from OpenAI, Google, Anthropic, AWS, and others show a consistent pattern: agents can dramatically accelerate development, but only when used inside the right workflows, with the right expectations.

By reading this article, you will understand exactly how AI agents work, where they outperform humans, where they fail, how they integrate with traditional tools, and how to use them effectively without compromising quality, safety, or long-term maintainability.

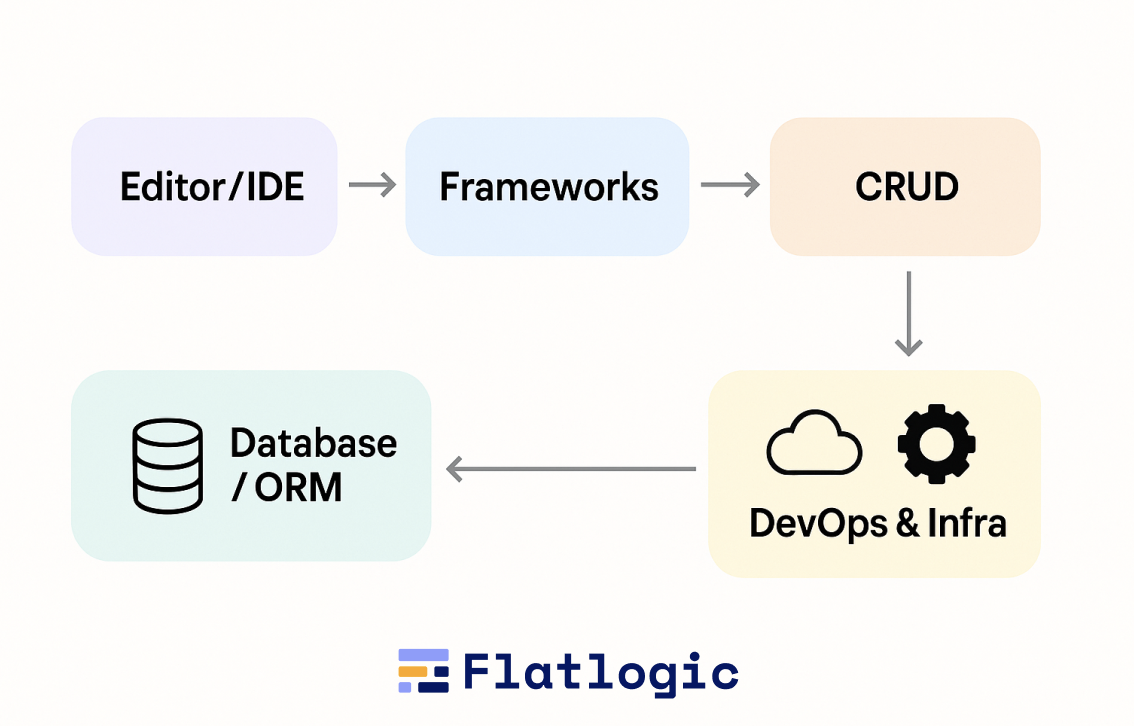

Traditional Dev Tools: The Baseline

Before we talk about AI agents, it’s important to understand the foundation they are disrupting: not by replacing it, but by accelerating it. For the past decade, web app development has relied on a predictable but heavy workflow built around manual coordination of tools, frameworks, and infrastructure. This workflow is powerful, reliable, and well-understood… but also slow, repetitive, and expensive.

What “Traditional Development” Actually Looks Like

A typical engineering setup, whether a startup or an enterprise, relies on a stack like this:

- Editor / IDE

- Frameworks

- Package & Build Tools

- Database + ORM

- DevOps & Infra

These tools are individually powerful, but in combination, they create cognitive load. A senior engineer juggles:

- architecture decisions

- schema design

- CRUD boilerplate

- data validation

- routing

- form wiring

- API contracts

- migration scripts

- component layouts

- error handling

- DevOps configs

- deployments

Every piece requires manual typing, wiring, and checking.

The Hidden Cost: Everything Starts From Zero

Even for the simplest CRUD app, the traditional workflow requires:

- Initializing a repo

- Scaffolding frontend + backend

- Setting up auth and roles

- Creating the data model

- Writing migrations

- Generating API routes

- Implementing controllers/services

- Building tables, forms, and detail screens

- Adding validation, pagination, and sorting

- Deploying to staging

- Debugging and fixing integration issues

Every new project starts with technically trivial but time-consuming steps.

This is why many developers joke:

“Building an app is 20% building features… and 80% wiring everything so they don’t break.”
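Much of that wiring is mechanical once the data model is fixed. As a minimal sketch, assuming a hypothetical `SCHEMA` dictionary format and an illustrative type mapping (neither belongs to any specific framework), here is how a migration and a standard set of CRUD routes can be derived from a single schema. This is the kind of deterministic boilerplate that generators and agents can produce in seconds:

```python
# Minimal sketch: derive a SQL migration and a CRUD route table from one schema.
# The SCHEMA format, TYPE_MAP, and route conventions are hypothetical.
import sqlite3

SCHEMA = {
    "table": "customers",
    "fields": {
        "name": {"type": "str", "required": True},
        "email": {"type": "str", "required": True},
        "credit_limit": {"type": "int", "required": False},
    },
}

# Illustrative mapping from schema types to SQL column types.
TYPE_MAP = {"str": "TEXT", "int": "INTEGER", "bool": "BOOLEAN"}


def migration_sql(schema: dict) -> str:
    """Build a CREATE TABLE statement with an integer primary-key id column."""
    cols = ["id INTEGER PRIMARY KEY"]
    for name, spec in schema["fields"].items():
        col = f"{name} {TYPE_MAP[spec['type']]}"
        if spec.get("required"):
            col += " NOT NULL"
        cols.append(col)
    return f"CREATE TABLE {schema['table']} (\n  " + ",\n  ".join(cols) + "\n);"


def crud_routes(schema: dict) -> list[tuple[str, str, str]]:
    """Return (HTTP method, path, handler name) for the five usual CRUD routes."""
    t = schema["table"]
    return [
        ("GET", f"/{t}", f"list_{t}"),
        ("POST", f"/{t}", f"create_{t}"),
        ("GET", f"/{t}/{{id}}", f"get_{t}"),
        ("PUT", f"/{t}/{{id}}", f"update_{t}"),
        ("DELETE", f"/{t}/{{id}}", f"delete_{t}"),
    ]


if __name__ == "__main__":
    sql = migration_sql(SCHEMA)
    print(sql)
    # Sanity check: the generated migration runs against an in-memory SQLite DB.
    sqlite3.connect(":memory:").execute(sql)
    for method, path, handler in crud_routes(SCHEMA):
        print(f"{method:6} {path:18} -> {handler}")
```

In a real project the same schema would also feed forms, tables, and validation. None of this output needs design judgment, only consistency, which is exactly why it eats so much human time.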

Why This Model Worked (for a While)

Traditional development dominated because it offered:

- Full control over the codebase

- Predictability: tools behave as expected

- A huge ecosystem of libraries, frameworks, and best practices

- Clear separation of responsibilities within teams

- Strong debugging and testing workflows

These are still valuable today. Agents haven’t replaced this foundation; they stand on top of it.

Where the Model Started to Crack

By 2023-2024, the engineering world hit a wall:

- Bootstrapping new apps became slower relative to business expectations.

- Developers spent disproportionate time on boilerplate, not innovation.

- Frontend/backend duplication (models, types, validations) felt wasteful.

- Product teams struggled with iteration speed.

- Hiring became expensive and was bottlenecked by senior-level expertise.

- Framework complexity grew faster than developer bandwidth.

- Even simple features required multiple layers of changes across the stack.

Every CTO knew the truth:

“Our frameworks are powerful, but we’re buried under the glue code.”
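The frontend/backend duplication mentioned above (models, types, and validations written once per side of the stack) is a textbook case of that glue code. A minimal sketch, assuming a hypothetical `FIELDS` definition and helper names invented for illustration: one description of the entity drives both backend validation and a generated TypeScript interface for the frontend.

```python
# Minimal sketch: one field definition as the single source of truth for
# backend validation and a generated frontend TypeScript type.
# The FIELDS format and helper names are hypothetical.

FIELDS = {
    "title": {"type": str, "required": True, "max_len": 120},
    "priority": {"type": int, "required": False},
    "done": {"type": bool, "required": True},
}

# Map Python types to TypeScript type names for code generation.
TS_TYPES = {str: "string", int: "number", bool: "boolean"}


def validate(payload: dict) -> list[str]:
    """Return a list of validation errors (an empty list means valid)."""
    errors = []
    for name, spec in FIELDS.items():
        if name not in payload:
            if spec["required"]:
                errors.append(f"{name}: required")
            continue
        value = payload[name]
        # bool is a subclass of int in Python, so reject it explicitly for int fields.
        wrong_type = not isinstance(value, spec["type"]) or (
            spec["type"] is int and isinstance(value, bool)
        )
        if wrong_type:
            errors.append(f"{name}: expected {spec['type'].__name__}")
        elif "max_len" in spec and len(value) > spec["max_len"]:
            errors.append(f"{name}: longer than {spec['max_len']}")
    return errors


def ts_interface(name: str) -> str:
    """Emit a TypeScript interface; optional fields get a '?' suffix."""
    lines = [f"export interface {name} {{"]
    for field, spec in FIELDS.items():
        opt = "" if spec["required"] else "?"
        lines.append(f"  {field}{opt}: {TS_TYPES[spec['type']]};")
    lines.append("}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(validate({"title": "Ship v1", "done": False}))  # []
    print(validate({"priority": "high", "done": True}))
    print(ts_interface("Task"))
```

Changing a rule in `FIELDS` updates both sides at once, which removes the class of bugs where the frontend and backend quietly disagree.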

The Gap AI Agents Step Into

Agents don’t replace traditional dev tools; they operate them.

But understanding the baseline helps explain the shift:

- Traditional tools assume a human drives every step.

- Agents assume the human sets the goal, and the machine performs the steps.

The entire hype around agents exists because the traditional model, although solid, has become too slow and too costly for the pace of modern product development.

What Are AI Agents in 2025? What’s the Hype?

AI “agents” in 2025 sit at the intersection of two trends:

- large language models becoming far more reliable at reasoning and multi-step tasks, and

- development tools exposing structured interfaces (IDEs, CLIs, repos, CI pipelines) that agents can operate directly.

But before we get into the hype, let’s make the definition brutally clear, because today the term is thrown around so loosely it borders on meaningless.

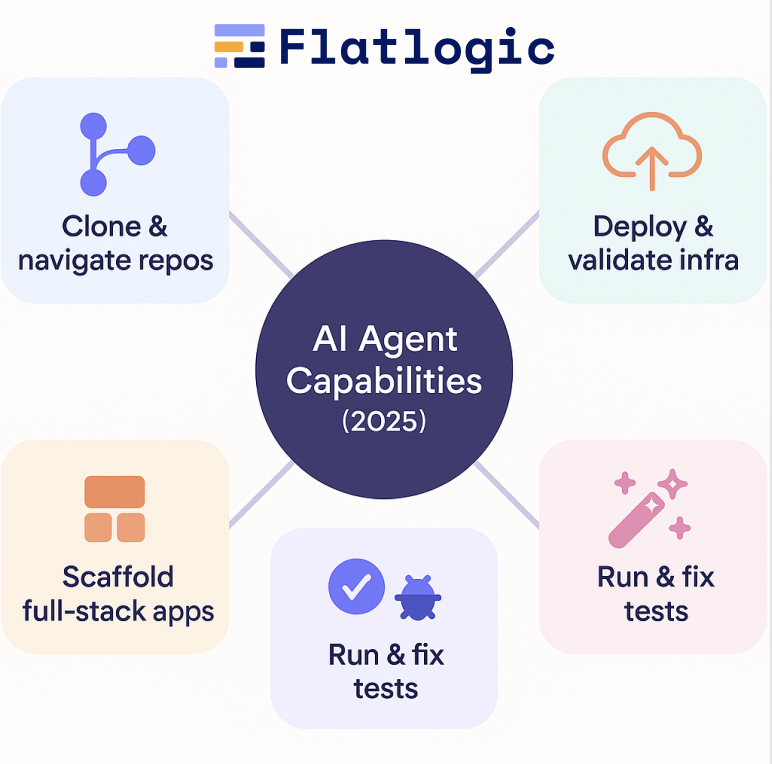

The No-BS Definition: What an AI Agent Actually Is

In 2025, an AI software development agent is:

A system powered by an LLM that can plan, execute, verify, and iterate on actions across your codebase or development environment using real tools, not just generate text.

That means an AI agent must have four capabilities:

- Planning – breaking a high-level goal (“add subscription billing”) into a graph of actionable tasks.

- Tool Use – running commands (Git, npm, Docker), interacting with IDEs or repos, querying APIs, reading logs, executing tests.

- Long-Term State – remembering prior steps, interpreting results, and keeping context about the project as it evolves.

- Feedback Loops – fixing its own errors by rereading test results, compiler errors, or runtime logs.

This is what distinguishes an agent from a chat model that simply spits out a code snippet. If something cannot run tools, check results, and adjust its own plan, it’s not an agent. It’s autocomplete with better PR.
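The planning capability can be pictured as a dependency graph. A minimal sketch using Python’s standard `graphlib` module, assuming a hypothetical breakdown of the “add subscription billing” goal into task names chosen for illustration; real agents build such plans dynamically and revise them as tool results come in:

```python
# Minimal sketch: a plan for "add subscription billing" as a dependency graph.
# Task names are hypothetical; graphlib is in the standard library (3.9+).
from graphlib import TopologicalSorter

# Each task maps to the set of tasks that must finish before it can start.
PLAN: dict[str, set[str]] = {
    "design_schema": set(),
    "write_migration": {"design_schema"},
    "billing_api_routes": {"write_migration"},
    "pricing_page_ui": {"design_schema"},
    "integration_tests": {"billing_api_routes", "pricing_page_ui"},
}


def execution_order(plan: dict[str, set[str]]) -> list[str]:
    """Return one task order that respects every dependency.

    Raises graphlib.CycleError if the plan contains a circular dependency.
    """
    return list(TopologicalSorter(plan).static_order())


def parallel_batches(plan: dict[str, set[str]]) -> list[list[str]]:
    """Group tasks into batches whose members do not depend on each other."""
    sorter = TopologicalSorter(plan)
    sorter.prepare()
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # every task whose prerequisites are done
        batches.append(ready)
        sorter.done(*ready)  # mark them finished, unlocking their dependents
    return batches


if __name__ == "__main__":
    print(execution_order(PLAN))
    for i, batch in enumerate(parallel_batches(PLAN), start=1):
        print(f"step {i}: {batch}")
```

A cycle in the plan raises `graphlib.CycleError`, which is a reasonable trigger for an agent to re-plan instead of executing blindly.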

So, Why the Hype?

Because for the first time, these systems can do work that looks like real software engineering:

- They can clone repos and navigate file structures like a junior dev.

- They can scaffold entire full-stack apps from text descriptions.

- They can run test suites and fix failures without human intervention.

- They can search through thousands of lines of code and apply systematic changes.

- They can deploy to cloud environments, generate containers, and validate deployments.

And unlike the ChatGPT era (2023-2024), where developers had to babysit the model line by line, 2025 agents can operate in autonomous loops inside controlled sandboxes.
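Mechanically, an “autonomous loop inside a controlled sandbox” means: run a tool, read its output, attempt a fix, and re-run, with hard limits on what may be executed and for how long. A minimal sketch, assuming a hypothetical `propose_fix` callback standing in for the model’s edit step and an illustrative `ALLOWED` command list; production platforms add isolation at the container or VM level on top of checks like these:

```python
# Minimal sketch of a verify-fix loop with a command allowlist.
# `propose_fix` stands in for the model's edit step and is hypothetical.
import subprocess
import sys
from dataclasses import dataclass
from typing import Callable

# Guardrail: executables the agent may invoke (compared literally to argv[0]).
ALLOWED = {"git", "npm", "pytest", sys.executable}


@dataclass
class ToolResult:
    exit_code: int
    output: str  # combined stdout and stderr: what the agent "reads"


def run_tool(argv: list[str], timeout: float = 120.0) -> ToolResult:
    """Run one allowlisted command (no shell) and capture its output."""
    if argv[0] not in ALLOWED:
        raise PermissionError(f"command not allowlisted: {argv[0]}")
    proc = subprocess.run(argv, capture_output=True, text=True, timeout=timeout)
    return ToolResult(proc.returncode, proc.stdout + proc.stderr)


def verify_fix_loop(
    test_cmd: list[str],
    propose_fix: Callable[[str], None],
    max_fixes: int = 3,
) -> bool:
    """Run tests; on failure, pass the log to the fixer and re-run.

    Returns True as soon as the tests pass, False if they still fail after
    `max_fixes` fix attempts (the point where a human should take over).
    """
    result = run_tool(test_cmd)
    for _ in range(max_fixes):
        if result.exit_code == 0:
            return True
        propose_fix(result.output)   # e.g. edit files based on the error log
        result = run_tool(test_cmd)  # re-run to verify the attempted fix
    return result.exit_code == 0


if __name__ == "__main__":
    # Demo with a command that always passes, so no fix is ever requested.
    ok = verify_fix_loop([sys.executable, "-c", "print('all tests passed')"], print)
    print("passed" if ok else "needs a human")
```

The bounded `max_fixes` budget matters as much as the loop itself: when it runs out, the agent should stop and hand the failure log to a human instead of editing indefinitely.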

AI Agents vs Traditional Dev Tools Comparison Table

| Dimension | Traditional Dev Tools | AI Agents (2025) |
|---|---|---|
| Who drives the workflow? | Human developers manually orchestrate every step. | Humans set the goal; agents plan and execute the steps. |
| Speed of initial development | Slow: days/weeks to scaffold full-stack apps. | Fast: minutes/hours to generate a functional base app. |
| Handling of boilerplate (CRUD, forms, migrations) | Manual, repetitive, error-prone. | Automated: generated from schema + intent extraction. |
| Code quality consistency | Depends on developer’s skill; style drift happens over time. | Consistent, deterministic patterns when paired with generators; agents refactor across repos. |
| Cross-file reasoning | Developer must mentally track everything; easy to miss details. | Agents read the entire repo, search relationships, and apply systematic changes. |
| Refactoring | Tedious, risky on large codebases. | Agents excel at mechanical, wide-scope refactors using type systems & tests. |
| Debugging | Manual log reading, trial and error, stepping through code. | Autonomous loops: run tests → read errors → attempt fixes. |
| Integration with tools (Git, Docker, CI/CD) | Developer executes commands, configures pipelines manually. | Agents call tools directly, edit configs, and re-run pipelines. |
| Creating new features | Requires the developer to update models, controllers, UI, tests, and infra. | Agent generates changes across layers from a single instruction. |
| Architecture decisions | Strong, human-led; requires deep context and domain understanding. | Weak: agents follow patterns but still rely on humans for architectural choices. |
| Understanding business logic | Strong: humans interpret domain needs, edge cases, and constraints. | Limited: agents need explicit instructions; prone to semantic gaps. |
| Error handling & edge cases | Developer responsibility; often added late. | Agents handle common cases; domain-specific cases still require humans. |
| Security & compliance | Humans ensure secure patterns; audits are needed. | Agents can implement known patterns but require strict guardrails & review. |
| Learning curve/onboarding | New devs need time to understand the codebase. | Agents quickly search, summarize, and navigate entire repos. |
| Scalability of team output | Linear: add more engineers → more output (until coordination slows). | Compounding: agents + humans scale output disproportionately. |
| Predictability of output | High: code is deterministic but slow to produce. | Medium: fast, but requires verification, tests, and human review. |
| Best use cases | Complex architectures, critical logic, performance-sensitive systems. | CRUD-heavy apps, internal tools, SaaS scaffolding, refactoring, repetitive tasks. |
| Overall value proposition | Maximum control, slower speed. | Maximum velocity, but requires guardrails. |

Traditional development tools give teams full control and predictable, deterministic output, but at the cost of speed, repetitive effort, and high cognitive overhead. AI agents flip this model: they dramatically accelerate scaffolding, CRUD, refactoring, and cross-file changes by orchestrating the same tools that humans traditionally drive. They are not replacements for architecture, domain expertise, or product thinking, but they are powerful accelerators for everything that is structured, mechanical, or repeated. The winning strategy in 2025 isn’t choosing between agents and traditional tools. It’s combining them: humans own the why and what, agents handle the how, and together they deliver software at a velocity that neither could achieve alone.
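The cross-file changes that agents handle well are often mechanical search-and-apply operations. A minimal sketch, assuming a plain regex rename through a hypothetical `rename_identifier` helper: it is deliberately not type-aware, which is why the default is a dry run whose report goes to review. Agents and IDEs that use a language server’s rename refactoring avoid the false matches in comments and strings that this approach can produce:

```python
# Minimal sketch of a systematic cross-file change: rename an identifier in
# every matching source file. Regex-based, NOT type-aware: it can also hit
# comments and strings, so the default is a dry run for human review.
import re
import tempfile
from pathlib import Path

SKIP_DIRS = {".git", "node_modules"}  # illustrative, not exhaustive


def rename_identifier(
    root: Path,
    old: str,
    new: str,
    suffixes: tuple[str, ...] = (".py", ".ts", ".tsx"),
    dry_run: bool = True,
) -> dict[str, int]:
    """Return {relative path: replacement count} for every file that changes."""
    # \b boundaries keep `getUserById` intact when renaming `getUser`.
    pattern = re.compile(rf"\b{re.escape(old)}\b")
    changed: dict[str, int] = {}
    for path in sorted(root.rglob("*")):
        rel = path.relative_to(root)
        if not path.is_file() or path.suffix not in suffixes:
            continue
        if SKIP_DIRS.intersection(rel.parts):
            continue
        text = path.read_text(encoding="utf-8")
        new_text, count = pattern.subn(lambda _m: new, text)
        if count:
            changed[rel.as_posix()] = count
            if not dry_run:
                path.write_text(new_text, encoding="utf-8")
    return changed


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        (root / "api.ts").write_text("export function getUser() {}\n", encoding="utf-8")
        (root / "page.tsx").write_text("getUser(); getUserById(1);\n", encoding="utf-8")
        print(rename_identifier(root, "getUser", "fetchUser"))  # dry-run report
```

The dry-run report is the hand-off point of the hybrid model: the agent does the searching and counting, and a human approves the change before anything is written.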

Best 5+ AI Agents: Where AI Agents and Traditional Dev Actually Work Together

The market is full of “AI app builders” that promise magic and deliver prototypes held together with duct tape. But a small subset of tools actually blends agentic automation with real, maintainable engineering practices: repos, frameworks, CI/CD, databases, Docker, and cloud environments that developers already trust.

This is the category worth paying attention to: tools that don’t try to replace software engineering, but compress the first 50-70% of the development lifecycle, letting developers take over when the work becomes architectural, strategic, or domain-specific. Below is a curated list of platforms that genuinely embody this hybrid model.

AppWizzy

A professional AI-driven development platform that gives every user a dedicated, real VM with Node/LAMP/Python stacks, Git, Docker, and a full Linux environment. Agents operate inside your VM like a junior developer: running commands, editing code, installing packages, generating migrations, debugging, and deploying.

Why it’s unique:

- Not a sandbox: real infrastructure with full root-level control

- AI agents run actual commands (npm, composer, git, docker, tests, etc.)

- Apps run as exportable Git repos using standard frameworks (Next.js, Laravel, Python Flask/FastAPI, etc.)

- Built-in deployments, logs, terminals, SSH, and databases

- Perfect mix of classical dev workflow + agent automation

Best for:

Founders, software teams, and engineers who want production-ready bases + real engineering control, not prototypes.

Lovable

A popular prompt-to-app builder that turns natural language descriptions into full-stack applications. It uses LLM reasoning to infer schema, data models, routes, and UI structure.

Key qualities:

- One of the fastest “idea → working MVP” flows on the market

- Intuitive conversational agent for app edits

- GitHub export with readable, human-oriented code

- Strong focus on frontend polish and usability

Where it excels:

Building SaaS prototypes, dashboards, CRUD apps, and web tools in hours, not weeks.

Bolt.new

A high-speed builder for React/Next.js projects with a strong editing agent built into the UI. Bolt is excellent at generating modern, clean component structures.

Key qualities:

- Agent rewrites your code incrementally and consistently

- Clean React code output using idiomatic patterns

- Great for UI-heavy projects, design systems, and landing pages

- Fast iteration loops; minimal cognitive load

Best for:

Teams building front-end heavy apps, dashboards, or marketing tools.

v0.dev (by Vercel)

An AI-powered UI generator that outputs high-quality React components built with shadcn/ui and Tailwind CSS for Next.js projects.

Key qualities:

- Extremely consistent UI generation

- Close alignment with modern frontend best practices

- Easy export into real Next.js projects

- Works well with human-led backend development

Best for:

Teams that want AI-generated UI, but manual control over logic, APIs, and architecture.

GitHub Copilot Workspace

A task-planning agent integrated into GitHub: you describe an issue → the agent creates a plan → implements changes → verifies via tests → opens a PR.

Why it matters:

- Reads the entire repo

- Proposes multi-step plans

- Executes changes across modules and folders

- Very strong with refactors, bug fixes, and incremental features

Best for:

Established teams with mature codebases that want agents to take issues off the backlog.

Replit Agents

Replit’s agent can read your files, make multi-step changes, run the code, and fix errors until the result works.

Key qualities:

- Great for small full-stack apps

- Super fast iteration

- Beginner-friendly but still powerful

- Works well for prototypes, internal tools, and solo developers

Best for:

Lightweight projects where speed > architecture.

Google Antigravity – Agentic Dev for Cloud Workflows

Google’s agent environment for Workspace and Cloud. Designed for real-world cloud workflows rather than demo theatrics.

Key qualities:

- Strong at multi-step planning

- Understands cloud resources, configs, and CI/CD pipelines

- Good at debugging backend logic and deployments

- Designed for large-scale development teams

Best for:

Developers working inside the Google ecosystem or teams who need agent-driven cloud automation.

AWS Kiro – Enterprise-Grade Agent Inside the IDE

Amazon’s agentic IDE assistant that executes commands, inspects logs, edits code, and navigates your AWS environment.

Key qualities:

- Production-grade safety constraints

- Multi-step autonomous debugging

- Deep integration with AWS services

- Strong for operational and backend-heavy projects

Best for:

Enterprise teams that want AI automation without abandoning AWS best practices.

Comparison Table: Top Agentic App Builders (2025)

| Tool | Type | Agent Capabilities | Code Ownership | Tech Stack | Best For | Key Strength | Main Limitation |
|---|---|---|---|---|---|---|---|
| AppWizzy | Full agentic builder + real VM dev environment | Runs commands on real VM, edits repos, installs packages, generates migrations, deploys, refactors | Full Git repo ownership | Next.js, Node, Python, LAMP | Production-ready apps, SaaS MVPs, internal tools | Full-stack generation + real infra + developer control | Requires basic engineering literacy (not for total beginners) |
| Lovable | Prompt-to-app builder | Schema inference, full-stack generation, conversational editing | GitHub export | React/Next.js + lightweight backends | Fast MVPs and CRUD apps | Very fast and intuitive idea → app flow | Weaker long-term maintainability & backend depth |
| Bolt.new | Frontend-heavy agent builder | Component generation, UI refactoring, code edits | Exportable | React / Next.js | UI-heavy dashboards & web apps | Clean UI generation with tight iteration loops | Limited backend automation |
| v0.dev (Vercel) | AI UI generator | Generates components, sections, and forms from prompts | Exportable | React (shadcn/ui + Tailwind + Next.js) | Modern UIs, landing pages, and dashboards | Highest-quality AI-generated UI | Not a full app builder; backend still manual |
| GitHub Copilot Workspace | Repo-based agent environment | Task planning, multi-step coding, test-running, PR creation | Full repo (your GitHub) | Any | Teams with existing codebases | Strongest for incremental changes & refactors | Not for scaffolding new apps |
| Replit Agents | Lightweight agentic runtime | Runs code, fixes errors, modifies files | Exportable | JS, Python, small stacks | Quick prototypes, solo developers | Fastest iteration for small apps | Not ideal for complex architectures |
| Google Antigravity | Cloud/IDE agent environment | Multi-step planning, cloud debugging, CI/CD edits | Full infra + code | Any (GCP ecosystem) | Cloud-native teams | Strong for infra + backend reasoning | Early-stage; workflows are still evolving |
| AWS Kiro | Enterprise-grade IDE agent | Executes commands, inspects logs, handles deployments | Full repo & AWS resources | Any (AWS ecosystem) | Enterprise teams on AWS | Strong safety, reliability, and deep AWS integration | Less beginner-friendly; tied to AWS |

How to Choose the Best AI Agent for You

Picking the right AI agent isn’t about hype; it’s about finding the tool that matches your workflow, skill level, and long-term needs. Use these five quick filters:

1. Define Your Goal

- Build a full app fast? → Choose a builder (AppWizzy, Lovable).

- Modify an existing repo? → Choose a repo-based agent (Copilot Workspace).

- Generate UI? → Choose a UI agent (v0.dev, Bolt.new).

2. Decide How Much Control You Need

If you want full Git repo ownership, real frameworks, Docker, and manual editing later, avoid tools that hide code or force proprietary runtimes.

3. Match the Tool to Your Skill Level

- Beginner-friendly: Lovable, Replit Agents

- Mid-level: Bolt.new, v0.dev

- Professional-grade: AppWizzy, AWS Kiro, Google Antigravity

4. Check Stack Compatibility

Choose agents that generate code in stacks you already use (Next.js, Node, LAMP, Python, Laravel, etc.). AI won’t save you from a stack mismatch.

5. Validate Error Handling

Good agents can run commands and tests, read logs, and fix their own mistakes. If an agent only generates code once and stops at the first error, skip it.

Conclusion

AI agents aren’t replacing developers; they’re reshaping where developers spend their time. Traditional tools still provide the stability, control, and predictability modern engineering relies on, but agents finally remove the repetitive glue work that has slowed teams for decades. The real breakthrough isn’t “AI writes your entire app.” It’s that AI now handles the boring 50-70%, while humans focus on product logic, architecture, and innovation.

If you want to experience the hybrid model in its strongest form (AI agents operating real infrastructure, real repos, real commands, and real stacks), you can try AppWizzy, which combines agentic automation with full developer control and production-ready output.

As we’ve seen throughout this article, the winning strategy in 2025 is not choosing between AI agents and traditional tools; it’s blending them. Teams that adopt this hybrid workflow ship faster, make fewer mistakes, and scale engineering effort far beyond headcount. Agents amplify human capability; they don’t replace it.

The future of software development belongs to teams that know how to use both: developers who understand the “why,” and agents that execute the “how.”
