TL;DR

- Prompt engineering is durable: treat prompts as specs, not chats

- Clarity beats brevity: define users, data, roles, scope, and constraints

- Research shows explicit steps and constrained outputs boost reliability

- Pick tools by fit: AppWizzy (prod full-stack), Lovable (UI MVPs), Bolt (flows), Replit (code-first)

- Mature teams version, test, and reuse prompts for predictability

Fact Box

- Poorly designed prompts increase hallucinations, reduce factual accuracy, and make outputs non-deterministic.

- Explicit, scoped, structured instructions and step-by-step reasoning improve model accuracy and reliability.

- If you don’t define the system, the AI will invent one for you.

- AppWizzy targets production-ready full-stack apps, emphasizing structure, data models, auth, roles, and deployment.

- Lovable suits fast UI-heavy MVPs; Bolt centers on process flows; Replit is a code-first cloud dev environment with AI.

If you’ve ever felt that prompt engineering is either mystical nonsense or a shallow trick that will soon disappear, read this to the end. You’ll walk away with a mental model that actually scales as models evolve.

When people search for Prompt Engineering Basics, they’re usually asking themselves a few quiet but uncomfortable questions:

- Why does the same model give wildly different results to different people?

- Is prompt engineering a real skill or just temporary folklore?

- How do I move from random prompting to repeatable, reliable outcomes?

- Will this still matter when models get smarter?

As Andrew Ng famously put it: “AI is the new electricity.” And like electricity, knowing how to wire it properly matters far more than merely having access to it.

The problem is real, measurable, and already studied. Research consistently shows that instruction framing, context ordering, and constraint clarity significantly affect model output quality and reliability. Papers such as “Pre-train, Prompt, and Predict” and “Chain-of-Thought Prompting Elicits Reasoning in Large Language Models” demonstrate that prompting is not cosmetic. It directly shapes reasoning paths and error rates. In applied settings, poorly designed prompts increase hallucinations, reduce factual accuracy, and make outputs non-deterministic, which is fatal for production systems.

By reading this article, you will understand:

- Why prompt engineering exists as a discipline (not a hack).

- How to think about prompts as interfaces, not text tricks.

- The core principles that survive model upgrades.

- And how to design prompts that are testable, reusable, and production-grade.

What is Generative AI?

Generative AI changed software creation faster than almost any technology before it. What once required teams of engineers, months of work, and thousands of dollars can now start with a single text box. You describe what you want, and an application appears. At least, that’s the promise.

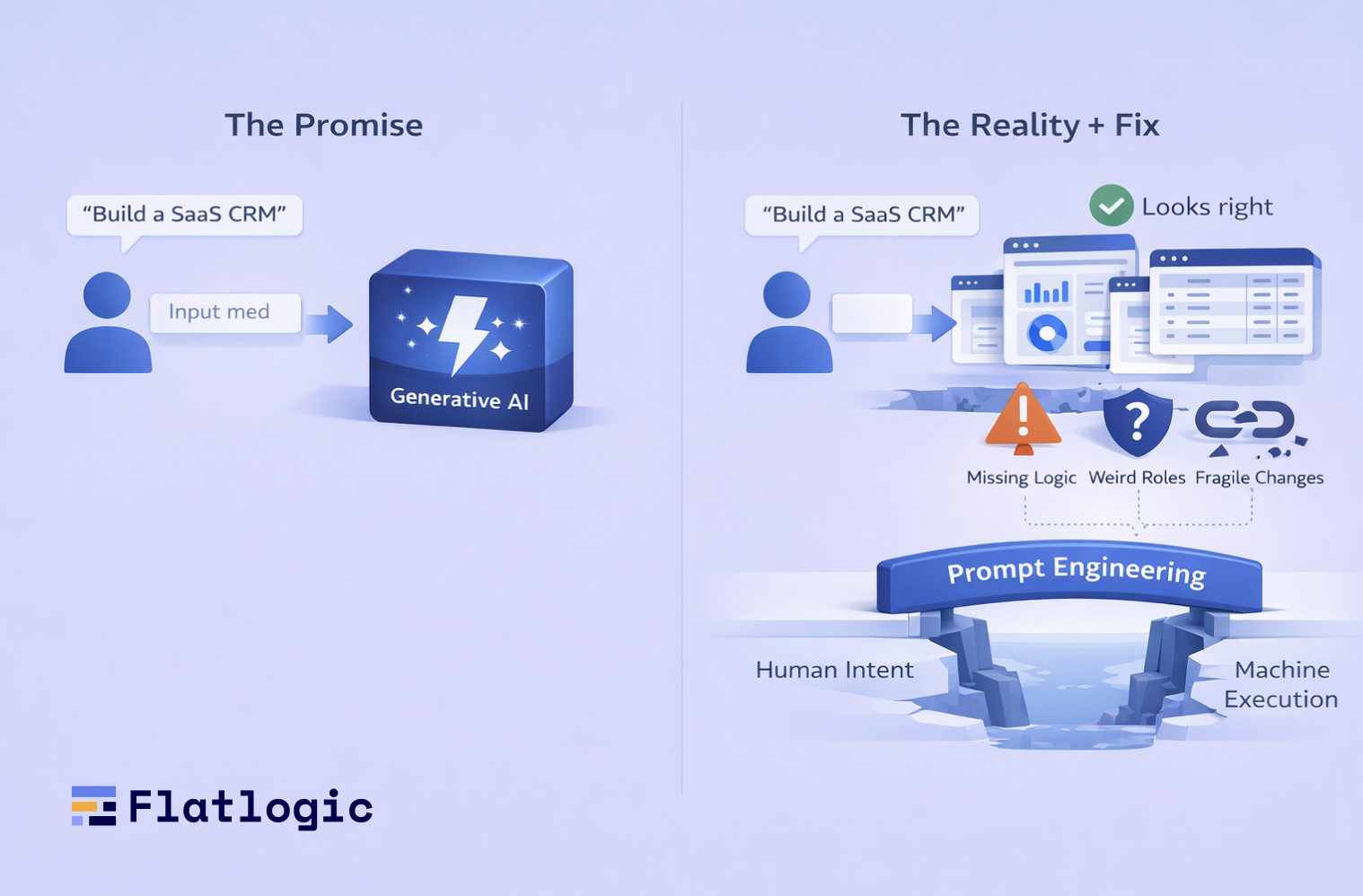

For many people, the first experience feels magical. You type something like “build a SaaS CRM,” press enter, and suddenly there are pages, forms, and dashboards. But very quickly, the magic fades. The app looks right, but doesn’t behave right. Important logic is missing. Roles don’t make sense. Small changes break unrelated things. You regenerate again and again, hoping the next attempt will somehow be better.

This frustration is extremely common and very misunderstood. Most people assume the AI is unreliable, immature, or overhyped. In reality, what’s failing is the communication layer between human intent and machine execution. Prompt engineering exists to fix exactly that.

Why generative AI feels inconsistent

Humans are excellent at filling in gaps. When someone says “build a CRM,” we automatically assume users, permissions, pipelines, contacts, notes, and reports. We don’t consciously list them because we’ve seen similar software many times before.

AI does not share that context.

When an AI model receives an instruction, it does not understand it the way a human does. It predicts the most likely continuation based on patterns. If information is missing, it guesses. Those guesses are not random, but they are not your guesses. Once the system commits to those assumptions, everything downstream depends on them.

This is why the same tool can feel brilliant one moment and useless the next. The AI is consistent. The inputs are not.

Prompt engineering is simply the discipline of removing ambiguity before it turns into bad assumptions.

The shift from configuration to conversation

Traditional software tools force a structure. You can’t create a database table without defining fields. You can’t add a user role without specifying permissions. This friction is annoying, but it prevents misunderstandings.

Generative AI tools remove that friction. Instead of configuration screens, you get a text box. Instead of schemas, you get language. This feels liberating, but it also means you are now responsible for clarity.

Tools like AppWizzy, Lovable, Bolt, and Replit don’t impose much structure upfront. They expect you to provide it through words.

Prompt engineering is what happens when people realize that “just describing it” is not enough.

What research quietly confirmed

Long before prompt engineering became a buzzword, research in natural language processing pointed in the same direction. Studies showed that models perform significantly better when instructions are explicit, scoped, and structured. Asking a model to reason step by step improves accuracy. Constraining output formats reduces hallucinations. Defining roles changes tone, vocabulary, and decision-making.

In simple terms, AI performs better when you tell it exactly what job it is doing and under what rules.

This means prompting is not a workaround for weak models. It is a control mechanism for the powerful ones. As models improve, they become more expressive, not less ambiguous. That makes good prompting more important over time, not obsolete.
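To make “constraining output formats” concrete, here is a minimal sketch in Python. It assumes you ask the model for a single JSON object and then refuse any reply that breaks that contract. The field names, the allowed priority values, and both function names are hypothetical, and no real model API is called; you would pass in whatever text your tool returns.

```python
import json

# Hypothetical output contract: the keys and types we asked the model to return.
REQUIRED_FIELDS = {"title": str, "priority": str, "estimate_hours": (int, float)}
ALLOWED_PRIORITIES = {"low", "medium", "high"}


def build_prompt(task_description: str) -> str:
    """Wrap a request in an explicit role, scope, and output contract."""
    return (
        "You are a project planning assistant.\n"
        f"Task: {task_description}\n"
        "Reply with a single JSON object and nothing else.\n"
        'Keys: "title" (string), "priority" (one of low, medium, high), '
        '"estimate_hours" (number).'
    )


def validate_reply(reply_text: str) -> dict:
    """Parse a model reply and reject anything outside the contract."""
    data = json.loads(reply_text)  # raises ValueError on non-JSON text
    if not isinstance(data, dict):
        raise ValueError("reply must be a JSON object")
    for key, expected_type in REQUIRED_FIELDS.items():
        if key not in data:
            raise ValueError(f"missing key: {key}")
        if not isinstance(data[key], expected_type):
            raise ValueError(f"wrong type for key: {key}")
    extra = set(data) - set(REQUIRED_FIELDS)
    if extra:
        raise ValueError(f"unexpected keys: {sorted(extra)}")
    if data["priority"] not in ALLOWED_PRIORITIES:
        raise ValueError("priority outside allowed values")
    return data
```

The point of the design is that a chatty or off-contract reply fails loudly instead of flowing into the rest of your system.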

The biggest mistake: describing ideas instead of systems

Most failed prompts are not wrong. They’re incomplete. They describe an idea, not a system.

An idea sounds like this: “I want an app that helps teams manage work.”

A system sounds like this: “Users belong to teams, teams have projects, projects have tasks, tasks move through states, and permissions differ by role.”

AI can build systems. It struggles with vibes.

When you don’t specify:

- Who the users are

- How they relate to each other

- What data exists

- What actions are allowed

- What is explicitly out of scope

The AI invents those answers. That invention is where unpredictability comes from.
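The checklist above can be turned into a quick self-check before you hit enter. The sketch below is one possible shape, not a standard tool: `SystemSpec` and its field names are hypothetical and simply mirror the five bullets.

```python
from dataclasses import dataclass, field, fields


@dataclass
class SystemSpec:
    """The five things the checklist says a prompt must pin down."""
    users: list[str] = field(default_factory=list)
    relationships: list[str] = field(default_factory=list)
    data: list[str] = field(default_factory=list)
    allowed_actions: list[str] = field(default_factory=list)
    out_of_scope: list[str] = field(default_factory=list)

    def unspecified(self) -> list[str]:
        """Return the names of sections that are still empty."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]


# "I want an app that helps teams manage work" only names the users.
idea = SystemSpec(users=["team members"])
print(idea.unspecified())
# ['relationships', 'data', 'allowed_actions', 'out_of_scope']
```

Every name in that printed list is a decision the AI would otherwise make on your behalf.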

Prompt engineering is the skill of turning ideas into systems using plain language.

A better mental model for prompting

A helpful way to think about prompting is this:

You are not chatting with AI.

You are writing a temporary specification.

Imagine you are briefing a very fast junior developer. They know many patterns. They work instantly. But they do not know your business, your priorities, or what you consider obvious. If you leave something unspecified, they won’t ask. They’ll decide.

Good prompts are not poetic. They are boring, explicit, and constrained. That’s why they work.

AppWizzy

AppWizzy is designed to generate full-stack web applications that are meant to actually run in production. It’s not just about UI mockups or demos. It focuses on structure, data models, authentication, roles, and deployment.

This makes AppWizzy a good fit for founders, startups, and teams building SaaS products, internal tools, CRMs, ERPs, or management systems. It assumes you care about ownership, extensibility, and long-term use.

Because of that, AppWizzy rewards clarity and punishes vagueness. If you describe the system well, the results are predictable. If you don’t, the AI will make structural decisions for you.

A weak prompt might say:

Build an inventory app.

A strong prompt explains the system:

Build a SaaS inventory management web application.
Users must sign up and belong to a company.
There are two roles: Admin and Staff.
Admins can manage users, products, and settings.
Staff can view products and update stock quantities.
Products have name, SKU, barcode, price, and quantity.
Show a dashboard with low-stock alerts.
No public access. Authentication required.

This prompt works because nothing essential is left implicit. The AI does not need to guess relationships, permissions, or scope.
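One way to see why the inventory prompt above is easy to build from: its roles and fields map almost line for line onto a data model. The Python below is an illustrative sketch, not what AppWizzy actually generates. The action names are hypothetical, and the low-stock threshold of 5 is an assumption, since the prompt does not name one.

```python
from dataclasses import dataclass

# Role-to-action map read off the prompt. Action names are hypothetical.
ROLE_ACTIONS = {
    "Admin": {"manage_users", "manage_products", "manage_settings"},
    "Staff": {"view_products", "update_stock"},
}


@dataclass
class Product:
    name: str
    sku: str
    barcode: str
    price: float
    quantity: int


def can(role: str, action: str) -> bool:
    """True only if the prompt explicitly grants this action to this role."""
    return action in ROLE_ACTIONS.get(role, set())


def low_stock(products: list[Product], threshold: int = 5) -> list[str]:
    """SKUs at or below the threshold (the value 5 is an assumption)."""
    return [p.sku for p in products if p.quantity <= threshold]
```

Note that even this strong prompt leaves the alert threshold open, so a follow-up line such as “alert when quantity is 5 or less” would remove one more guess.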

Lovable

Lovable focuses on speed and visual output. It’s great for early-stage ideas, demos, and UI-heavy MVPs where you want something clickable quickly.

Lovable is a good fit for non-technical founders, designers, and product managers who want to validate an idea or show a concept. It is less suited for complex backend logic unless that logic is spelled out very clearly.

The key to prompting Lovable well is to focus on flows and boundaries. If you don’t define what the app should not do, Lovable may add unnecessary complexity.

A prompt that works well looks like this:

Create a simple CRM dashboard UI.
Pages include login, dashboard, leads list, and lead details.
Leads have a name, email, status, and notes.
Status options are New, Contacted, Won, Lost.
No billing, no integrations, no automation.

Here, constraints are just as important as features. By limiting scope, you reduce the chance of unexpected behavior.

Bolt.new

Bolt is oriented around processes rather than pages. It’s well-suited for task management, approvals, internal operations tools, and systems where the order of actions matters.

Bolt works best when prompts describe sequences instead of static features. If you explain how users move through the system step by step, the AI has far less room to misinterpret intent.

A strong prompt for Bolt might look like this:

Build a task management application with the following flow.
Users sign up and create a workspace.
Inside a workspace, users create projects.
Projects contain tasks.
Tasks move through the Todo, In Progress, and Done states.
Workspace owners can manage members. Members can manage tasks only.

The explicit ordering here prevents the AI from inventing alternative structures.
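The task states in the prompt above describe a small state machine, which is part of why sequence-based prompts are hard to misread. Here is a minimal Python sketch of that flow. It assumes tasks only move forward one step at a time, which the prompt itself does not state, and `next_state` is a hypothetical helper name.

```python
# Ordered task states taken from the prompt.
STATES = ["Todo", "In Progress", "Done"]


def next_state(current: str) -> str:
    """Advance a task one step; refuse to move past the last state."""
    index = STATES.index(current)  # raises ValueError for unknown states
    if index == len(STATES) - 1:
        raise ValueError("task is already Done")
    return STATES[index + 1]


print(next_state("Todo"))         # In Progress
print(next_state("In Progress"))  # Done
```

If tasks should also be able to move backward, say so in the prompt; otherwise that is one more choice left to the tool.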

Replit

Replit is different from the others. It’s not just an app builder; it’s a full cloud development environment enhanced with AI. Replit is best for developers, technical founders, and hackathon builders who are comfortable thinking in terms of code and architecture.

When prompting Replit, you should describe the technical structure as well as functionality. Replit expects a higher level of technical intent.

A good prompt might be:

Create a full-stack web application using Node.js and PostgreSQL.
Implement user authentication.
Add CRUD functionality for projects and tasks.
Expose a REST API.
Keep the code simple and readable.

Here, you are guiding implementation choices, not just outcomes.

Bad prompts vs. Better prompts

One of the fastest ways to improve prompting is to look at rewrites.

Bad:

Build a marketplace app.

Better:

Build a simple marketplace where sellers list fixed-price products and buyers place orders. No bidding, no chat, no reviews.

Bad:

Create a dashboard.

Better:

Create a dashboard that shows total users, active users this week, and new signups per day.

Each rewrite removes ambiguity. Each removed ambiguity increases predictability.

Why short prompts usually fail

Short prompts feel elegant, but elegance is not the goal. Control is. Every missing detail forces the AI to choose. Every choice creates a branch. The more branches, the more unpredictable the result. This is why longer prompts often produce better results, not because they are long, but because they leave less room for interpretation. The goal is not verbosity. The goal is completeness.
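A rough back-of-the-envelope calculation shows how fast those branches multiply. The counts below are invented for illustration, not measured: they stand for how many plausible choices a tool might have for each detail a short prompt omits.

```python
from math import prod

# Hypothetical number of plausible choices per unspecified detail.
unspecified = {"user roles": 3, "data model": 4, "auth method": 3, "scope": 5}


def possible_interpretations(options: dict[str, int]) -> int:
    """Multiply the choices together: each open detail is another branch."""
    return prod(options.values())


print(possible_interpretations(unspecified))  # 180

# Pin down roles and auth in the prompt and only two details stay open.
remaining = {"data model": 4, "scope": 5}
print(possible_interpretations(remaining))  # 20
```

Under these assumed numbers, two extra sentences in the prompt cut the space of outcomes from 180 to 20.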

Prompt engineering over time

Prompt engineering is not a one-time activity. Good prompts evolve. Most teams start with:

- vague prompts

- lots of regeneration

- frustration

Then they move toward:

- structured prompts

- saved templates

- predictable results

Eventually, prompts become part of the product. They are versioned, tested, and reused. At that point, AI stops feeling like a toy and starts feeling like infrastructure.
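As a sketch of what “versioned, tested, and reused” can look like in practice, the Python below keeps prompt templates in a small registry keyed by name and version, with a test that guards the scope limit. The registry layout, the version numbers, and the function names are all hypothetical; real teams may store prompts in files or a database instead.

```python
from string import Template

# Hypothetical in-repo registry: (name, version) -> template text.
PROMPTS = {
    ("dashboard", "1.0.0"): Template(
        "Create a dashboard that shows $metrics. No other widgets."
    ),
    ("dashboard", "1.1.0"): Template(
        "Create a dashboard for $audience that shows $metrics. No other widgets."
    ),
}


def render(name: str, version: str, **values: str) -> str:
    """Render a pinned prompt version; a missing placeholder raises KeyError."""
    return PROMPTS[(name, version)].substitute(**values)


def test_dashboard_prompt_keeps_scope_limit() -> None:
    """Fail if an edit drops the constraint or leaves a placeholder unfilled."""
    text = render("dashboard", "1.1.0", audience="admins", metrics="total users")
    assert "No other widgets." in text
    assert "$" not in text
```

Pinning a version means a prompt change shows up in review like any other code change, and the test catches an edit that silently removes a constraint.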

The rule that never changes

No matter which tool you use, one rule always applies: If you don’t define the system, the AI will invent one for you.

Sometimes you’ll get lucky. Most of the time, you won’t. Prompt engineering is not hype. It is a basic communication discipline for probabilistic systems. Learn it, and generative AI becomes leverage. Ignore it, and it becomes noise. As AI tools grow more powerful, this skill becomes more important, not less.

Conclusion: Prompting Is a Skill That Compounds

Prompt engineering isn’t mystical, and it’s not a temporary phase that disappears once models get “smart enough.” It exists for the same reason specifications, schemas, and APIs exist: powerful systems need clear instructions. Generative AI didn’t remove that need; it amplified it.

Once you stop treating prompts like casual conversation and start treating them like interfaces, results become predictable. Iteration becomes incremental instead of destructive. AI stops feeling like a slot machine and starts behaving like infrastructure. The mental model you’ve just learned (describe systems, not vibes; remove ambiguity early; constrain before you generate) will keep working as models evolve.

If you want to actually apply this instead of just understanding it in theory, use tools that reward clear prompting rather than hide mistakes behind magic. Flatlogic is built for generating real, production-ready SaaS and business apps where structure, ownership, and long-term use matter. AppWizzy gives you a fast path from a well-written prompt to a running full-stack app with hosting included.

No hype, no tricks. Clear prompts in → real software out.
