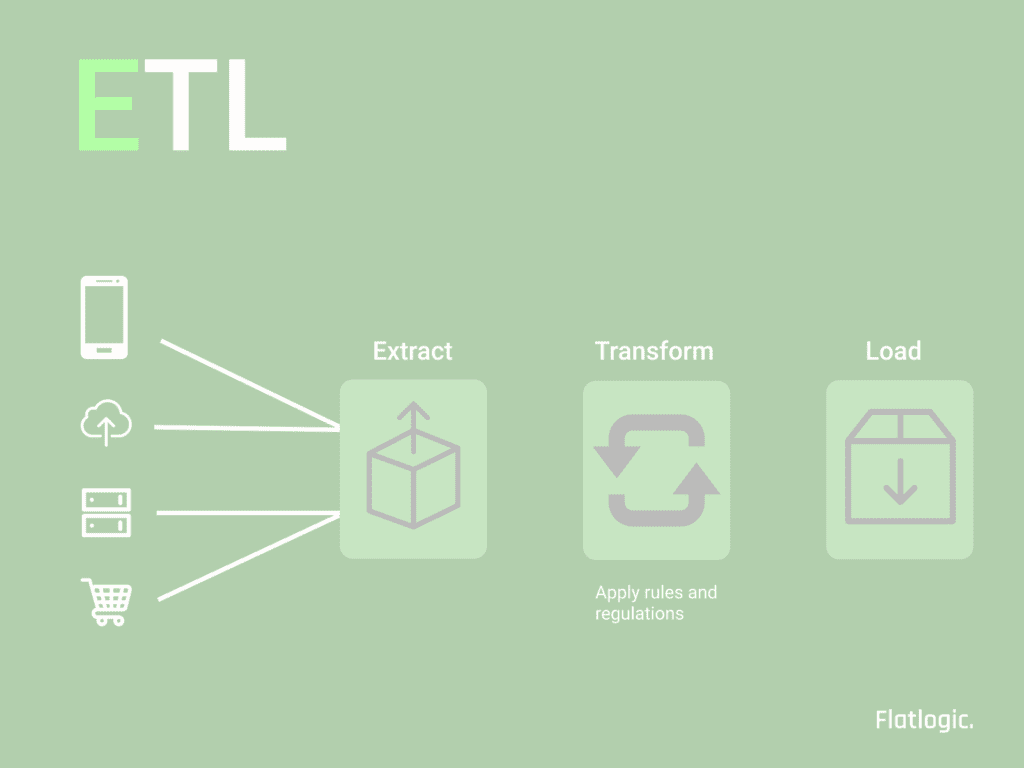

ETL process basics

ETL (Extract, Transform, Load) is a well-known architecture pattern whose popularity has been growing with the rise of data-driven applications and data-centric architectures and frameworks. They say “data is the new oil”, and just like with oil, finding it is not enough: you will need to invest a lot in its extraction, processing, transportation, storage, final product distribution, etc. In simple words, if your system is going to move data from one or more sources, change its format in some way, make it available via some data management system, and do it repeatedly, then you can be sure you are going to develop an ETL system even if you don’t call it that. This article will get you familiar with the issues, challenges, known solutions, hacks, and best practices of the modern ETL process.

Issues and challenges

When building an ETL system you are likely to run into a combination of issues that depend on the nature of your data. Here is a list of some of the challenges you may face at each data processing stage: Extract, Transform, and Load.

Extract

Availability of data sources

The more complex and valuable your data is, the higher the chances that it will be scattered across numerous sources and presented in various formats. If the source data is accumulated in a structured data storage like PostgreSQL or MongoDB and the source owner belongs to your organization, direct access via a query language interface and a DB driver could be an option. However, in the real world, direct access to the database service is rarely granted, as it poses high security risks and may open up breaches and vulnerabilities. Besides, direct access to a source DB is overkill: an ETL system only needs to read the data, and any writing is out of its scope. Therefore the most efficient and hence popular way to access source data is to create an HTTP endpoint that serves the data in a structured format like XML, JSON, or CSV. It is highly recommended to follow REST principles when designing your endpoint. A less efficient yet viable option is storing data in a file and publishing it on a web server. In such a case it would make sense to compress files before publishing, e.g. in ZIP format.
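As a rough illustration of consuming such an endpoint, the sketch below parses a response body that arrives as JSON or CSV, optionally wrapped in a ZIP archive. The helper name `parse_payload`, its `fmt` argument, and the sample records are assumptions made for this example. The download step itself is left out so that the snippet runs offline.

```python
import csv
import io
import json
import zipfile


def parse_payload(body: bytes, fmt: str) -> list:
    """Turn a raw response body into a list of records (hypothetical helper)."""
    if fmt == "zip":
        # Assumption: the archive holds exactly one CSV file.
        with zipfile.ZipFile(io.BytesIO(body)) as archive:
            name = archive.namelist()[0]
            return parse_payload(archive.read(name), "csv")
    text = body.decode("utf-8")
    if fmt == "json":
        return json.loads(text)
    if fmt == "csv":
        return list(csv.DictReader(io.StringIO(text)))
    raise ValueError(f"unsupported format: {fmt}")


# Made-up sample payloads standing in for real HTTP responses.
json_body = b'[{"id": "1", "price": "9.99"}]'
csv_body = b"id,price\n1,9.99\n"

print(parse_payload(json_body, "json"))
print(parse_payload(csv_body, "csv"))
```

Whatever the wire format, the rest of the pipeline receives the same list of records, which keeps format handling in one place.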

Unfortunately, data is rarely available in a structured format like this, especially when the data source is owned by someone outside your organization. Most likely the data is intended for humans, not ETL agents, and is presented in HTML as a traditional browsable website. This is where the concept of scraping comes into play. Of course, implementing an HTTP downloader and parser from scratch would be a poor idea since many great open source solutions are at your service, for instance Scrapy, a Python-based web scraping and crawling framework. As modern websites are often single-page web apps rather than collections of static HTML documents, browsing and getting access to separate pages might be even more challenging and require full emulation of user behavior. In this case the browser automation tool Selenium could be helpful: it is a robust solution with a long history of evolution and bindings for multiple programming languages, including but not limited to Java and Python.
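To give a feel for what a scraper does under the hood, here is a minimal sketch that pulls item links and names out of an HTML listing. It uses Python's standard `html.parser` module instead of Scrapy or Selenium only so that it runs without extra dependencies. The `LinkExtractor` class and the sample markup are invented for this illustration.

```python
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collects (href, link text) pairs from anchor tags."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        # Only collect text while we are inside an anchor that has an href.
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


# Made-up listing page standing in for a downloaded document.
page = (
    '<ul><li><a href="/item/1">Blue widget</a></li>'
    '<li><a href="/item/2">Red widget</a></li></ul>'
)
extractor = LinkExtractor()
extractor.feed(page)
print(extractor.links)
```

A dedicated framework adds what this sketch lacks: request scheduling, retries, throttling, and selectors that are easier to maintain.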

When creating a scraper or spider, it is very important to keep in mind the legal aspects of the matter. Data privacy and intellectual property ownership questions are often far from being trivial and are still intensively disputed on all authority levels. So it might be a good idea to consider doing the following before diving into that stormy ocean of open data:

1. Contact the data source owner and ask about the availability of official API endpoints or permission to scrape the data. In many cases, the solution might be easier than it seems. Link exchange, mentioning the data owner, or revenue sharing could be good motivators to share the data with you. After all, sharing is caring.

2. Read the Terms of use and Data privacy pages carefully. Answers to many of your questions might be found there. Also keep in mind that those pages have real legal importance.

3. Do some research into your local data privacy laws and google the latest news on legal cases related to the use of public data, especially data scraping and crawling.

4. Look into the structure of the source you are going to scrape. If it has specific means of protection against scraping agents, such as a CAPTCHA, then it’s not a good idea to scan such sources automatically.

Generally speaking, data scraping is not illegal but is subject to numerous regulations and needs to be done with caution and ethics in mind.
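One machine-readable signal worth checking alongside the points above is the site's `robots.txt` file. The sketch below uses Python's standard `urllib.robotparser` module. The rules, the URLs, the `my-etl-bot` agent name, and the `may_scrape` helper are made up for the example, and passing this check is not a substitute for the legal review described above.

```python
from urllib.robotparser import RobotFileParser

# Made-up robots.txt content; a real scraper would download it from the site.
robots_txt = """\
User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())


def may_scrape(url: str, agent: str = "my-etl-bot") -> bool:
    """Return True if the parsed rules allow `agent` to fetch `url`."""
    return parser.can_fetch(agent, url)


print(may_scrape("https://example.com/catalog"))
print(may_scrape("https://example.com/private/report"))
```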

Dynamic nature of data

Nothing is stable in this changing world and data is one of the least stable things. Information which is relevant and true at the moment could become history in a few minutes. Good examples of such rapid changes would be pricing information, market availability, and user status, to mention a few. In terms of ETL architecture, this means that the more dynamic the source data is, the more often it needs to be extracted and re-processed. Generally, you would find the following approaches to the dynamic data problem:

1. Notification service. The data source notifies its users about the changes by either posting an event code to the subscriber endpoint or posting the full information about which data object has changed and what exactly the changes are. In some cases, the data changes would be posted not straight away but at certain intervals in batches, typically daily.

2. Snapshot publishing service. This might be a practical solution for dynamic data where keeping track of the change history is important. A file containing either a full data snapshot or a diff log is published daily, with the file name typically containing the date or a timestamp.

3. Polling. The two approaches above, where the data owner actively notifies the clients about changes or publishes the changes at certain intervals, are a dream service for data consumers as they save tons of resources and time. Unfortunately, in most cases this is too good to be true, and the data consumers have to take on the burden of tracking the changes themselves. The most obvious way to do it is by polling the data sources over and over again and comparing the latest data to the previously scanned snapshot. It is slow, inefficient, and expensive for both the serving and the consuming party, yet oftentimes inevitable. The source scanning process can become the starting point of the whole ETL pipeline. Its execution schedule can be configured as a simple cron job or by a more sophisticated pipeline scheduling system such as Apache Airflow or AWS Glue.
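Comparing the latest scan to the previous snapshot can be sketched as follows. This is a minimal example under the assumption that every item has a stable identifier and is JSON-serializable. The function names and the sample snapshots are hypothetical.

```python
import hashlib
import json


def fingerprint(item: dict) -> str:
    """Stable hash of an item's content (keys sorted so their order does not matter)."""
    payload = json.dumps(item, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def diff_snapshots(previous: dict, latest: dict) -> dict:
    """Compare two snapshots keyed by item id."""
    added = sorted(latest.keys() - previous.keys())
    removed = sorted(previous.keys() - latest.keys())
    changed = sorted(
        key
        for key in latest.keys() & previous.keys()
        if fingerprint(latest[key]) != fingerprint(previous[key])
    )
    return {"added": added, "removed": removed, "changed": changed}


# Made-up snapshots from two consecutive scans.
yesterday = {"a": {"price": 10}, "b": {"price": 20}, "c": {"price": 30}}
today = {"a": {"price": 10}, "b": {"price": 25}, "d": {"price": 40}}
print(diff_snapshots(yesterday, today))
```

In practice it is often enough to persist only the fingerprints of the previous scan rather than the full items, which keeps the comparison cheap.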

A few life hacks for scraping dynamic data:

1. A typical data presentation on a website is a combination of a list page and a detailed item page available upon click. Try to limit your scans to list pages, and trigger the details page scan only if an item change has been detected. Sometimes it works, though unfortunately not always.

2. Not all the data scanned from the data source will ever be used in your system. Therefore, for some data items so-called lazy processing can be applied: you check the data item for changes only upon demand by the user. Of course, this lifehack has limited applicability as well.
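The lazy processing idea could look roughly like the sketch below: an item is re-checked at the source only when somebody asks for it and the cached copy is older than a chosen age. The `LazyItemCache` class, its parameters, and the fake fetcher are assumptions made for this example.

```python
import time


class LazyItemCache:
    """Re-fetches an item only on demand and only if the cached copy is stale."""

    def __init__(self, fetch, max_age_seconds, clock=time.time):
        self._fetch = fetch              # callable: item_id -> fresh item data
        self._max_age = max_age_seconds
        self._clock = clock              # injectable, which makes testing easier
        self._cache = {}                 # item_id -> (fetched_at, data)

    def get(self, item_id):
        now = self._clock()
        cached = self._cache.get(item_id)
        if cached is not None and now - cached[0] < self._max_age:
            return cached[1]
        data = self._fetch(item_id)
        self._cache[item_id] = (now, data)
        return data


# Usage with a fake fetcher that records how often the source is hit.
calls = []


def fake_fetch(item_id):
    calls.append(item_id)
    return {"id": item_id}


cache = LazyItemCache(fake_fetch, max_age_seconds=3600)
cache.get("item-1")
cache.get("item-1")  # served from the cache, no second fetch
print(len(calls))
```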

Transform

Heterogeneous nature of data

There are many (if not infinitely many) ways to model and present the same data, which creates yet another challenge for data consumers, especially for those who aggregate data from multiple sources related to one business domain. In this case, we recommend sticking to the following strategy:

1. Design a unified universal format for the incoming data. It doesn’t have to be the final load-ready format but rather the intermediate presentation which will be conveyed into the transform stage.

2. Design and develop several data adaptors transforming the incoming raw data into the standard form. Such preliminary conversions could include unification of address, company and country names, date and time formats, number and digit presentation, etc. It doesn’t matter whether this pre-formatting stage belongs to the extract or the transform part of your ETL flow, but it is important to do this unification before the business-logic-driven transformations are applied, so that by then the data is standardized as much as possible. In other words, we advise the following practice: first transform on the micro (format) level, then proceed to macro (business) level transformations. Such an approach will allow you to plug in more data sources and adaptors when needed and decouple the transform stage from the extract stage.

3. Document the unified data formats properly as the transform logic might be implemented by different engineers. If for some reason you need to change the data presentation standard in the future, the format documentation will be used as the single source of truth for all teams, e.g. when refactoring the data adaptors or changing the data transfer interface between the extract and transform stages.
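The strategy above can be sketched as one unified record type plus one adaptor per source. Everything here is hypothetical: the `UnifiedRecord` schema, the two source layouts, and the tiny country lookup are invented to show the micro-level (format) unification only.

```python
from dataclasses import dataclass
from datetime import date, datetime


@dataclass(frozen=True)
class UnifiedRecord:
    """Intermediate format handed to the transform stage (hypothetical schema)."""
    company: str
    country_code: str
    registered_on: date


# Tiny illustrative lookup; a real one would be far larger.
COUNTRY_CODES = {"germany": "DE", "deutschland": "DE", "united states": "US", "usa": "US"}


def normalize_country(name: str) -> str:
    return COUNTRY_CODES[name.strip().lower()]


def adapt_source_a(raw: dict) -> UnifiedRecord:
    """Source A (made up) uses ISO dates and English country names."""
    return UnifiedRecord(
        company=raw["company_name"].strip(),
        country_code=normalize_country(raw["country"]),
        registered_on=date.fromisoformat(raw["registered"]),
    )


def adapt_source_b(raw: dict) -> UnifiedRecord:
    """Source B (made up) uses DD.MM.YYYY dates and local country names."""
    return UnifiedRecord(
        company=raw["firma"].strip(),
        country_code=normalize_country(raw["land"]),
        registered_on=datetime.strptime(raw["datum"], "%d.%m.%Y").date(),
    )


record_a = adapt_source_a({"company_name": " Acme GmbH ", "country": "Germany", "registered": "2020-03-01"})
record_b = adapt_source_b({"firma": "Acme GmbH", "land": "Deutschland", "datum": "01.03.2020"})
print(record_a == record_b)
```

Adding a third source then means writing one more adaptor, while the transform stage keeps working against `UnifiedRecord` unchanged.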

Data enrichment

Data transformations are not always about changing the presentation format, entity normalization, or decomposition. In many data-centric services, the ultimate product value is derived from combining different kinds of data, possibly from various data sources, often combining proprietary data with public data. For instance, it could be combining product specification data with pricing data, demographic data with economic data, etc.

Data linking

Combining data this way may remind you of the utopian concept of Linked Data popular in the 2000s, where every piece of data had its unambiguous unique presentation and identification. In the real world, linking pieces of heterogeneous data is likely to generate a bunch of challenges. Solving most of them boils down to creating a standardized presentation and appropriate adaptors. You can reuse our recommendations from the section on the heterogeneous nature of data above.
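As a small illustration of linking via a standardized presentation, the sketch below joins product specification records with pricing records on a normalized name. The normalization rule in `link_key`, the field names, and the sample data are assumptions. Real linking usually needs more careful matching than this.

```python
def link_key(name: str) -> str:
    """Normalize a product name into a join key: lowercase, alphanumerics only."""
    return "".join(ch for ch in name.lower() if ch.isalnum())


def enrich(specs: list, prices: list) -> list:
    """Attach a price to every spec record whose normalized name matches."""
    price_by_key = {link_key(p["product"]): p["price"] for p in prices}
    return [
        {**spec, "price": price_by_key.get(link_key(spec["name"]))}
        for spec in specs
    ]


# Made-up records from two different sources.
specs = [{"name": "Widget X-200", "weight_kg": 1.2}, {"name": "Gadget Z", "weight_kg": 0.4}]
prices = [{"product": "widget x200", "price": 49.0}]
print(enrich(specs, prices))
```

Items without a match keep a `None` price here, which makes unlinked records easy to spot and review.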

Adding metadata: classification and tagging

Another type of data enrichment that can bring additional value to your data is classification. It can be helpful for navigation in data visualization systems or used as one of the criteria in data analysis, following the divide and conquer principle. Several approaches can be applied to adding class information to your data. A predefined list of classes can be presented as a separate entity, each class having a dedicated identifier. Another good practice is to organize the list of classes as a hierarchy, also known as a taxonomy. Depending on the nature of your data, items can be assigned one exclusive class or many optional classes. Another popular approach to classification is adding a list of text labels, or tags, to your data items. It is generally more flexible both in terms of processing and storage, although it should be used with caution, as the list of tags can grow uncontrollably, creating duplicates, overlaps, and other discrepancies. Managing the metadata layer of your ETL system is a complex, non-trivial task that requires constant revision and refactoring of class taxonomies and tag lists. It makes a lot of sense to have a dedicated metadata owner in your data engineering team who keeps track of all the changes and syncs up all the parts of the system that depend on metadata.
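Both approaches can be sketched in a few lines: a taxonomy stored as a child-to-parent map, and a tag normalizer that merges near-duplicates before they pile up. The class identifiers, the tags, and the normalization rule are made up for the example.

```python
# Hypothetical taxonomy: each class id maps to its parent (None marks a root).
TAXONOMY = {
    "electronics": None,
    "phones": "electronics",
    "smartphones": "phones",
}


def ancestors(class_id: str) -> list:
    """Walk up the hierarchy from a class to its root."""
    path = []
    parent = TAXONOMY[class_id]
    while parent is not None:
        path.append(parent)
        parent = TAXONOMY[parent]
    return path


def normalize_tags(tags: list) -> list:
    """Collapse case and whitespace variants so near-duplicate tags merge."""
    cleaned = {" ".join(tag.lower().split()) for tag in tags}
    return sorted(tag for tag in cleaned if tag)


print(ancestors("smartphones"))
print(normalize_tags(["Big Data", "big  data ", "ETL", ""]))
```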

Named entity recognition

Another type of data enrichment is related to adding names of people, companies, countries, addresses, and other geo information to your data. It is commonly known under the term Named Entity Recognition (NER). It is a huge topic that can’t be covered within the scope of this article. When you face the necessity to integrate NER into your ETL system, you will most likely have to make a few decisions:

- Build an in-house NER system or adopt an open-source or commercial one. NER functionality is often provided as a part of Natural Language Processing (NLP) packages. A good practice is to test the system on the same kind of data that you will feed into it later in the production environment. Test runs on generic text samples can often be misleading. Another important aspect of a NER system is the ability to control the lists of named entities and configure the recognition rules. Using a system with a preset configuration as a black box is very likely to cause serious issues down the road.

- Use a rule-based recognition system or a machine learning/neural network approach. This decision strongly depends on the number of named entities you are dealing with and the diversity of their presentation formats. Our general recommendation is to use a machine learning-based NER system, preferably a cloud-based SaaS, if your text information is standard and generic. When dealing with non-generic texts, such as specific types of contracts, bank forms, etc., it might be a good idea to go for the rule-based approach.
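To make the rule-based option more concrete, here is a minimal sketch of a gazetteer lookup, one of the simplest forms of rule-based recognition. The entity lists, the labels, and the `recognize` function are assumptions for this example. A production system would need many more rules, e.g. for spelling variants and abbreviations.

```python
import re

# Illustrative gazetteer; the entity lists are assumptions for this example.
GAZETTEER = {
    "COMPANY": ["Acme Corp", "Globex"],
    "COUNTRY": ["Germany", "France"],
}


def recognize(text: str) -> list:
    """Return (start, end, label, surface form) for each gazetteer hit."""
    found = []
    for label, names in GAZETTEER.items():
        for name in names:
            # Word boundaries prevent matches inside longer words.
            pattern = r"\b" + re.escape(name) + r"\b"
            for match in re.finditer(pattern, text):
                found.append((match.start(), match.end(), label, match.group()))
    return sorted(found)


print(recognize("Acme Corp opened an office in Germany."))
```

Because the lists and rules are plain data, they stay under your control, which is the configurability the first point above asks for.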

Quality metrics

In all non-trivial data transformations and enrichment such as classification, tagging, and named entity recognition, processing quality is key (although processing speed can be critical in some cases). You can rarely expect 100% output quality even if the input data is crystal clean (and of course it’s not). In complex cases, 60-70% output quality can be considered a fairly good result. The major data processing quality metrics are recall and precision. Recall is the percentage of entities you were able to recognize correctly out of all entities present in the input text. Precision is the percentage of correctly recognized entities out of all entities recognized by the system in the input text. In text processing and classification tasks there is traditionally a trade-off between precision and recall, which is quite intuitive: the more entities you try to recognize, the less strict the recognition rules and models you will have to apply, and therefore the more garbage you will mine along with the valuable findings.
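The two definitions translate directly into code. The sketch below assumes that the recognized and the expected entities of one document are available as sets. The function name and the sample entities are made up.

```python
def precision_recall(predicted: set, expected: set) -> tuple:
    """Compute precision and recall for one document's entity sets."""
    true_positives = len(predicted & expected)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(expected) if expected else 0.0
    return precision, recall


# Made-up example: 4 entities are present, the system returns 5, 3 of them correct.
expected = {"Acme Corp", "Germany", "Globex", "France"}
predicted = {"Acme Corp", "Germany", "Globex", "Paris", "Monday"}
precision, recall = precision_recall(predicted, expected)
print(f"precision={precision:.2f} recall={recall:.2f}")
```

Here the system finds 3 of the 4 real entities (recall 0.75) but 2 of its 5 answers are garbage (precision 0.60), which is the trade-off described above in miniature.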

Human-in-the-loop

If data quality is critical and the automated solution can only deliver a certain level of precision and recall, human intervention is often the only option. In the context of an ETL pipeline, the human-in-the-loop pattern can be an efficient solution. It means that a human expert curating some or all of the data items is treated as one of the processing blocks, which can be placed somewhere in the data flow diagram, e.g. between extract and load. A good practice is to precede the manual processing stage with a decision block which measures whether the processing results for the current data item are reliable enough or require revision by a human expert. A good way to organize such a human-in-the-loop processing block is to create a database for intermediate data storage with a simple CRUD UI, so that a group of human experts can pick up the incoming data items, review and fix them, and flag them as processed so that the processing can continue, e.g. the data items are conveyed to the load stage.
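The decision block and the review step might be sketched like this. It assumes each item carries a `confidence` score produced by the automated stage. The threshold value, the in-memory queues standing in for the intermediate database, and the function names are all hypothetical.

```python
from collections import deque

REVIEW_THRESHOLD = 0.8  # Assumed cut-off; tune it against your own quality metrics.

review_queue = deque()  # stands in for the intermediate database
load_queue = deque()    # stands in for the input of the load stage


def route(item: dict) -> str:
    """Decision block: send low-confidence items to human review."""
    if item["confidence"] >= REVIEW_THRESHOLD:
        load_queue.append(item)
        return "load"
    review_queue.append(item)
    return "review"


def complete_review(item: dict, fixed_fields: dict) -> None:
    """An expert has reviewed the item: apply fixes, flag it, pass it on."""
    review_queue.remove(item)
    load_queue.append({**item, **fixed_fields, "reviewed": True})


route({"id": 1, "country": "France", "confidence": 0.95})
doubtful = {"id": 2, "country": "Germny", "confidence": 0.40}
route(doubtful)
complete_review(doubtful, {"country": "Germany"})
print(list(load_queue))
```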

Load

The major trade-off when deciding where to load your data after it has undergone all the transformations is between a relational (SQL) database and some other sort of storage, commonly known as NoSQL, e.g. MongoDB or Elasticsearch. The main aspects on which such a decision should be based are:

- The number of records in your data (current and potential)

- The number of new records to be added and updated daily

- Spikes in data inflow

- The complexity of the relational model

The rule of thumb is to remember two principles:

- Relational databases are hard to scale horizontally.

- NoSQL databases are limited in joining normalized data entities.

ETL process lifehacks

To find the golden middle, a couple of lifehacks can be applied:

- Denormalization: some data items are not fully decoupled (normalized) but are copied directly into the joined parent items. It creates duplicated data and potential maintenance issues down the road but helps with data scaling and read latency.

- Use a combination of SQL and NoSQL in your system. Store normalized business entities in an SQL database and complex business entities (documents) in a NoSQL storage like MongoDB. The relational storage will ensure that all entities are unique and unambiguous, and the document storage will be easy to scale. Some overhead data management might be required every now and then, e.g. full re-indexing of the document collection, which seems to be a fair price for the combination of link integrity and low access latency you will get.
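Denormalization can be illustrated with plain dictionaries: the normalized customer record is copied into every order document, so reading an order needs no join. The entity names and sample data are assumptions. In a combined setup the normalized side would live in the SQL database and the documents in the NoSQL store.

```python
def denormalize(orders: list, customers: dict) -> list:
    """Embed a copy of the customer record into each order document."""
    return [
        {
            "order_id": order["order_id"],
            "total": order["total"],
            # Duplicated on purpose: reads need no join, updates must touch every copy.
            "customer": dict(customers[order["customer_id"]]),
        }
        for order in orders
    ]


# Made-up normalized data, as it might sit in relational tables.
customers = {7: {"name": "Acme Corp", "country": "DE"}}
orders = [
    {"order_id": 1, "customer_id": 7, "total": 120.0},
    {"order_id": 2, "customer_id": 7, "total": 80.0},
]
documents = denormalize(orders, customers)
print(documents[0])
```

The maintenance cost mentioned above shows up as soon as the customer changes: every order document holding a copy has to be rewritten or re-indexed.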

ETL Process. Backing up

Only paranoids survive.

Thank you for reading!
